How Custom Instructions Can Change Your AI Experience

We’ve all had those moments—sitting in front of our screens, scratching our heads, and wondering why our AI assistant just doesn’t get it.

It’s a modern-day conundrum that has left many of us questioning the efficacy of artificial intelligence.

But what if the issue isn’t with the AI but rather with how we’re interacting with it?

A Reddit user recently shared a game-changing approach that has sparked a lively debate and, more importantly, has offered a fresh perspective on human-AI interactions.

This isn’t just a fleeting Reddit trend; it’s a topic that could redefine how we view and interact with AI. So, let’s dive in and see what the buzz is all about.

The Reddit Revelation (Prompt Included)

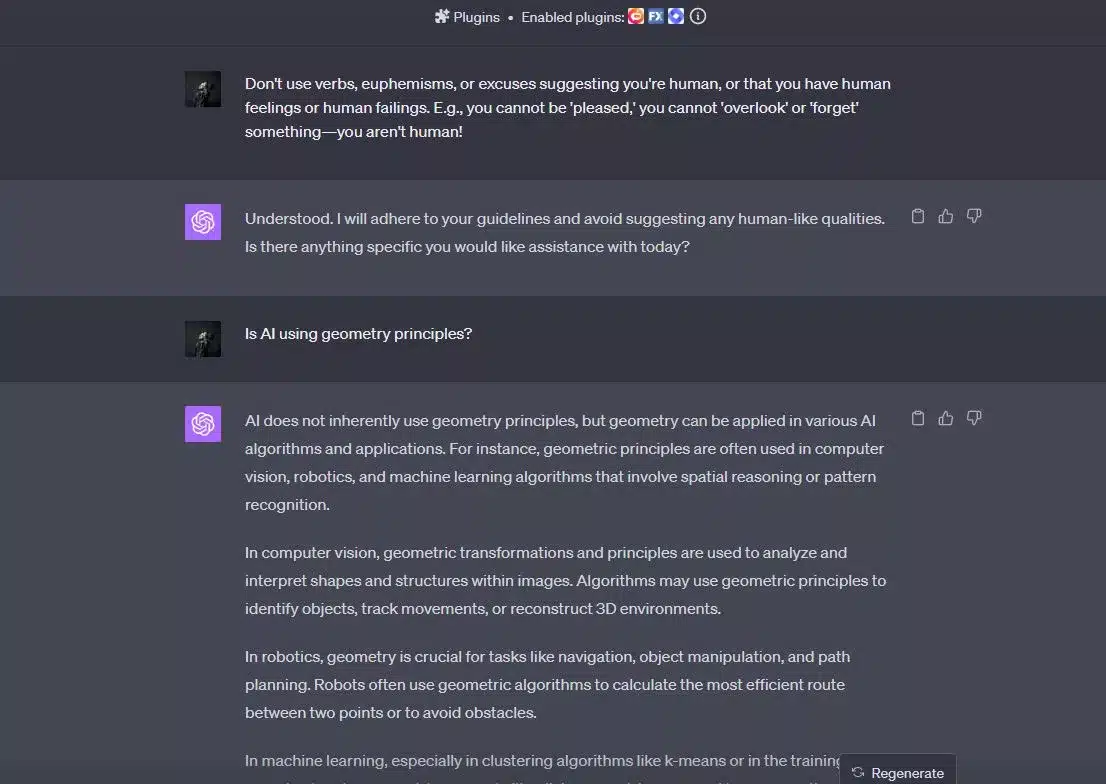

The Reddit user, MollyInanna2, shared a prompt that they use to interact with ChatGPT:

“Don’t use verbs, euphemisms, or excuses suggesting you’re human, or that you have human feelings or human failings. E.g., you cannot be ‘pleased,’ you cannot ‘overlook’ or ‘forget’ something—you aren’t human!”

According to the post, incorporating this prompt shifted ChatGPT’s responses from human-like apologies to factual explanations of its errors, and this seemingly simple change had a profound impact on the user’s emotional experience with the AI.
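For readers who reach ChatGPT-style models through code rather than the chat window, one way to apply the same idea is to place the instruction at the start of every conversation. The sketch below is a minimal illustration, assuming a chat interface that accepts a list of role/content messages. The helper name `build_messages` and the sample question are hypothetical, and no model or service is actually called.

```python
from typing import Dict, List

# First sentence of the prompt quoted above (straight quotes used for code).
CUSTOM_INSTRUCTION = (
    "Don't use verbs, euphemisms, or excuses suggesting you're human, "
    "or that you have human feelings or human failings."
)


def build_messages(user_text: str, instruction: str = CUSTOM_INSTRUCTION) -> List[Dict[str, str]]:
    """Return a conversation with the custom instruction placed first.

    Hypothetical helper: it only builds the message list and does not
    contact any model or service.
    """
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": user_text},
    ]


messages = build_messages("Why did the last answer leave out the 2021 figures?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the instruction in one constant means every conversation starts from the same wording, which makes it easier to compare responses before and after a change to the prompt.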

The Psychology Behind It

The concept of the Uncanny Valley, proposed by roboticist Masahiro Mori in 1970, describes the unsettling feeling people get when something almost resembles a human but falls short in some way.

Something similar can happen when an AI mimics human behavior in conversation.

By removing human-like errors and apologies, the AI becomes less of an “almost human” and more of a tool.

This shift can reduce the emotional toll of what otherwise feels like dealing with a “bad employee” who keeps apologizing for mistakes but never improves.

It’s a fascinating psychological shift that has implications not just for individual users but also for the broader field of human-computer interaction.

The Community’s Take

The Reddit post garnered various responses, each contributing to the growing discourse on AI interaction.

Some users were skeptical, arguing that ChatGPT can’t truly understand its errors.

Others were more optimistic, appreciating the new approach for eliminating the Uncanny Valley feeling.

One user even mentioned that they would incorporate this prompt into their own interactions with ChatGPT. Taken together, the range of opinions adds depth to our understanding of AI and its emotional impact on users.

The Bigger Picture

This Reddit post opens up a broader discussion about how we interact with AI.

Should we treat AI systems as tools or as entities that mimic human behavior?

And if it’s the latter, how far should this mimicry go?

These are questions that tech companies like OpenAI need to consider as they continue to develop more advanced AI systems.

It’s a complex issue that requires a multi-faceted approach, involving not just technological advancements but also ethical considerations.

Conclusion

The way we interact with AI is evolving, and it’s fascinating to see how a simple change in instructions can have such a profound emotional impact.

Whether you’re a tech enthusiast or just someone who uses AI for daily tasks, this Reddit revelation offers valuable insights into the emotional dynamics of human-AI interaction.

So, the next time you find yourself frustrated with your AI assistant, remember that a small tweak could make a world of difference.

FAQs

Why is the emotional aspect of AI interactions important?

Understanding the emotional aspect of AI interactions helps in creating more user-friendly and emotionally satisfying experiences. It also helps in avoiding the “Uncanny Valley” effect, where AI that is almost, but not quite, human-like can cause discomfort or unease.

What is the Uncanny Valley?

The Uncanny Valley is a hypothesis suggesting that as robots or AI become more human-like, our emotional responses to them become increasingly positive, until they reach a point where they’re almost human but not quite. At that point, the response turns to unease or discomfort.

How can custom instructions improve my interaction with AI?

Custom instructions can guide the AI to respond in a manner that aligns with your preferences, whether you want factual, straightforward answers or a more human-like interaction. This can enhance your emotional satisfaction and overall experience.

Are there ethical considerations in making AI more human-like?

Yes, the article touches on the ethical implications of AI development, particularly when it comes to mimicking human emotions and behavior. This is an ongoing debate in the tech community and has broader implications for the future of AI.

What are the future implications of this approach to AI?

The approach of using custom instructions to guide AI interactions opens up broader questions about the future of AI development, including ethical considerations and design choices that impact our emotional well-being.

How can I implement these changes in my own AI interactions?

The article provides insights into how a simple set of instructions can transform your AI experience. While it doesn’t offer a step-by-step guide, it does encourage readers to consider how they can apply similar principles in their own interactions with AI.
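One rough way to see whether an instruction like this is being followed is to scan replies for human-sounding wording. The sketch below is an illustration under stated assumptions, not a method from the Reddit post: the word list and the function name `find_human_like_phrases` are hypothetical, the two sample replies are made up, and a plain keyword match will miss many cases and flag some innocent ones.

```python
import re
from typing import List, Sequence

# Hypothetical, deliberately small list of human-sounding words; extend to taste.
HUMAN_LIKE_WORDS = ("sorry", "apologize", "pleased", "forgot", "overlooked", "feel")


def find_human_like_phrases(reply: str, words: Sequence[str] = HUMAN_LIKE_WORDS) -> List[str]:
    """Return the listed words that appear in the reply as whole words."""
    found = []
    for word in words:
        # \b keeps "feel" from matching inside a longer word such as "feeling".
        if re.search(rf"\b{re.escape(word)}\b", reply, flags=re.IGNORECASE):
            found.append(word)
    return found


# Made-up sample replies, one apologetic and one factual.
before = "I'm sorry, I overlooked that detail."
after = "The previous response omitted that detail."

print(find_human_like_phrases(before))  # ['sorry', 'overlooked']
print(find_human_like_phrases(after))   # []
```

A check like this is only a quick signal for comparing replies with and without the instruction. It says nothing about whether the factual explanation itself is accurate.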